Need a policy for using ChatGPT in the classroom? Try asking students

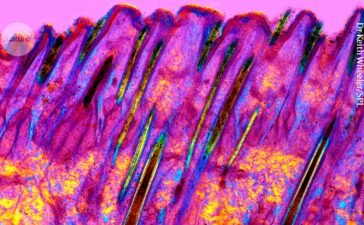

The use of AI chatbots in universities has been a topic of hot debate, but students rarely have any say in how use policies are crafted. Credit: Gabby Jones/Bloomberg/Getty

I don’t like ChatGPT. I’ve never asked it to craft recipes based on the leftovers in my fridge, write cover letters or generate songs about post-structuralism in the style of Beyoncé. Although I hear it’s great for those things, I’ve so far managed to avoid using the generative artificial intelligence (genAI) chatbot.

But I haven’t been able to avoid thinking about it: since its launch, the AI tool has been the focus of heated debates between academics who advocated for the benefits of using genAI technology in their writing; educators who were troubled by the ethics of how it was developed; and university leaders who struggled to provide straightforward guidance around how to use it in teaching. Everyone, it seems, is feeding into policies about ChatGPT — except students, whose perspectives are seldom sought by university staff.


Students are on the receiving end of AI-use policies, but rarely have agency over how they are developed. So, despite my personal reservations about the technology, I decided to develop an AI-use policy for my course alongside my students. Here’s what happened.

An open discussion

My background is in documentary film and cultural geography, and I teach in the Human Geography Program at Te Herenga Waka—Victoria University of Wellington. My students are interested in understanding how power operates in the world, concerned about the dire impacts of the climate crisis and committed to decolonization and social justice, and have an awareness of their own responsibilities in relation to these themes.

All of these topics are connected to contemporary debates about AI use, so I decided to have my students think about what an ethical-use policy for the technology might look like. My class is large, so I held discussions with each of my five smaller tutorial groups. I asked the students to choose one of three assigned readings (available in the supplementary information) about the challenges and ethical implications of AI use and development, and to reflect on it in the context of the course’s themes. They had to decide whether they thought they should be able to use AI, and if so, how, and explain their reasoning in a blogpost, which they submitted before the group discussions.


Unsurprisingly, the students identified several parallels between key course themes and the ethical implications surrounding AI. They questioned how much agency authors had in having their work used to train highly profitable AI algorithms, and said they didn’t want to contribute to the exploitation of others. The students were also concerned about ChatGPT’s tendency to promulgate racist and sexist content, reflecting the public data sources on which it was trained. They considered the complicity of AI technologies in reproducing harm through extraction of data and natural and human resources, as well as perpetuating unequal wealth distribution. And they discussed the human toll of AI, exemplified by an investigation last year by Time, which reported that OpenAI used people in precarious working conditions in Kenya to classify explicit text-based content to generate labelled examples of sexual abuse, hate speech and violence. This work was essential to make the chatbot safer for users, but failed to consider the traumatic effects of exposure to such content on the workers.

Maja Zonjić involved her students in crafting an AI-use policy for her course. Credit: Maja Zonjić

Students also reflected on the many benefits of AI, including its use in coding, proofreading, developing ideas, simplifying complex theories and summarizing academic articles. Students suggested that such applications might be particularly useful for people whose first language is not English, as well as those who experience difficulties with information processing and learning.

We reflected on AI platforms’ potential to make outcomes for first-generation learners more equitable, but noted that these tools can also exacerbate existing inequalities because wealthier students can afford to access higher-quality, subscription-based software, such as the GPT-4o model. None of the students who contributed to the open discussion wanted to use genAI technologies to do their assignments for them, and those who planned to use the tools for assistance gained an increased understanding of their limitations and propensity for creating misinformation.

Key lessons

Considering the students’ reflections, I decided that AI-based tools could be used for writing and research support in my course, but the onus was on the students to discern which tools and content were appropriate and accurate.

Students are not allowed to generate large sections (such as entire paragraphs) of assignments, and if they upload other authors’ copyrighted works into an AI platform to generate a summary, they have to adjust the privacy and data-control settings to opt out of allowing the content to be used to train AI algorithms. Students who use AI in their assignments must provide a brief statement indicating how and why they used the technology, and can choose to discuss the ethical dilemmas inherent in doing so.

Several weeks after our AI-policy co-development workshop, I asked the students what they thought about the exercise. Their responses were mostly positive: they said they now thought more critically about AI use, appreciated having agency over course parameters and enjoyed hearing other students’ perspectives and having the opportunity to engage with them. Students were strongly in favour of me repeating the initiative next year.

The exercise gave me an excellent opportunity to consider how AI systems might be used effectively in the classroom. Involving students emphasized the value of their experiences with AI and challenged assumptions about who gets to ‘hold knowledge’ or ‘have expertise’ in academic spaces. Moreover, debating with students about how to use AI tools ethically is important: it demonstrates the complex relationships between the people who develop, profit from, build and use new technologies. The approach highlights the fact that nothing about these entanglements is ‘objective’ — regardless of how easy it is to launch ChatGPT on a computer screen. It also acknowledges that we all have responsibilities towards each other, despite the national borders that separate us or which part of the worker–user spectrum we fall on.

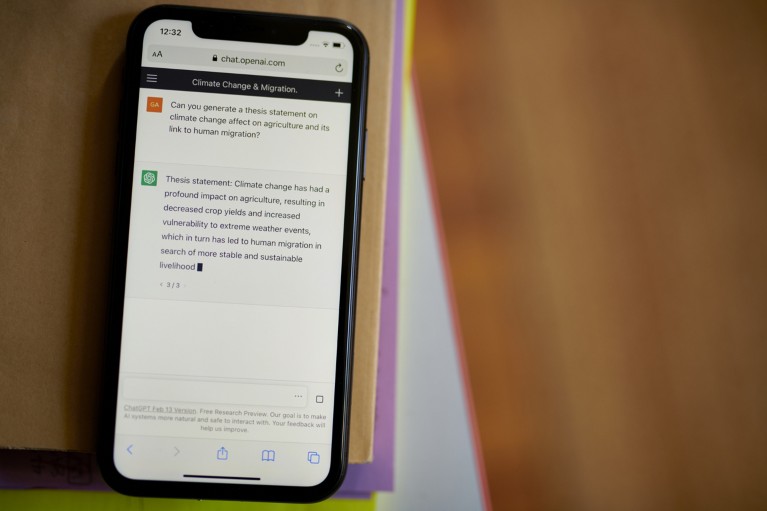

As I completed this column, I asked ChatGPT (based on GPT-3.5) how I, as a university lecturer, might develop an ethical AI-use policy. The chatbot outlined a well-written 11-step process in just under 500 words. None of the steps involved asking my students for feedback — a poignant illustration of one of ChatGPT’s most significant shortcomings: chatbots collate and repackage how things have been done before, but cannot imagine how things could be done better or more equitably. Perhaps that’s a uniquely human aspiration.

Competing Interests

The author declares no competing interests.