What we do — and don’t — know about how misinformation spreads online

Online misinformation contributed to the 6 January 2021 Capitol riots. Credit: Samuel Corum/Getty

“The Holocaust did happen. COVID-19 vaccines have saved millions of lives. There was no widespread fraud in the 2020 US presidential election.” These are three statements of indisputable fact. Indisputable — and yet, in some quarters of the Internet, hotly disputed.

They appear in a Comment article1 by cognitive scientist Ullrich Ecker at the University of Western Australia in Perth and his colleagues, one of a series of articles in this issue of Nature dedicated to online misinformation. It is a crucial time to highlight this subject. With more than 60% of the world’s population now online, false and misleading information is spreading more easily than ever, with consequences such as increased vaccine hesitancy2 and greater political polarization3. In a year in which countries home to some four billion people are holding major elections, sensitivities around misinformation are only heightened.

Misinformation poses a bigger threat to democracy than you might think

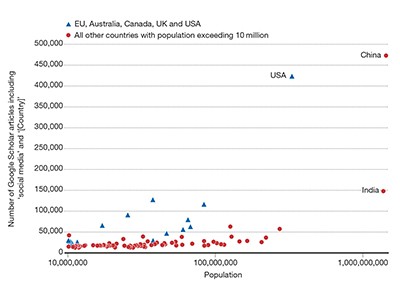

Yet common perceptions about misinformation and what well-grounded research tells us don’t always agree, as Ceren Budak at the University of Michigan School of Information in Ann Arbor and her colleagues point out in a Perspective article4. The degree to which people are exposed tends to be overestimated, as does the influence of algorithms in dictating this exposure. And a focus on social media often means that wider societal and technological trends that contribute to misinformation are ignored.

The message from researchers is that misinformation can be curbed. But for that to happen, platforms and regulators must take action, and evidence on how misinformation spreads and why must be collected from diverse societies across the world.

The role of social-media platforms in abetting the spread of misinformation is shown in a research article5 by David Lazer, a political and computer scientist at Northeastern University in Boston, Massachusetts, and his colleagues. They analysed the activity of more than 550,000 Twitter users during the 2020 US presidential election cycle. Their findings fit with the view that overall exposure to misinformation is overstated: only 7.5% of users shared one or more pieces of misinformation during the study period.

This period included the attack on the US Capitol on 6 January 2021, after which Twitter deplatformed 70,000 users deemed to be trafficking misinformation. The authors show that this move coincided with a huge drop in the sharing of misinformation. It is hard to know whether the ban modified user behaviour directly, or whether the violence at the Capitol had an indirect effect on the likelihood of users sharing narratives about the ‘stolen’ 2020 presidential election that fuelled it. Either way, the team writes, the circumstances constituted a natural experiment that shows how misinformation can be countered by social-media platforms enforcing their terms of use.

Such experiments currently look unlikely to be repeated. As Lazer tells Nature, he and his colleagues were lucky to be collecting data ahead of and during the attack, and to be doing so at a time when Twitter was permissive in the data it allowed scientists to extract. Since its takeover by entrepreneur Elon Musk, the platform, now rebranded X, has not only reduced content moderation and enforcement, but also limited researchers’ access to its data.

There is a similar opacity in another, even less-well-studied part of the misinformation ecosystem: its funding. Misinformation wasn’t created by the Internet or social media, but the advert-funded model of much of the web has boosted its production. For example, automated advertising exchanges auction off ad space to companies according to which sites — including misinformation sites — people are looking at, and the sites receive a cut if users look at and click on ads.

In a second research article6, Wajeeha Ahmad, a doctoral candidate at Stanford University in California, and her colleagues show that companies are ten times more likely to wind up advertising on misinformation sites if they advertise using exchanges. Although firms can follow up on where their ads are placed, most advertising decision makers underestimate their involvement with misinformation — and consumers are similarly unaware.

In Ahmad’s words, online advertising is “happening kind of in the dark”: as with social media, the bulk of the data needed to understand how misinformation spreads are held by online platforms. If platforms are conducting interventions of their own to try to curb the spread of misinformation, that is happening away from public scrutiny.

The first step to tackling misinformation must be for companies to engage more with researchers. The studies that have already been performed show that it is possible to collaborate ethically on data while ensuring people’s privacy. Moreover, taking steps against the spread of provable falsehoods does not amount to a curb on freedom of speech if it is done transparently. If companies are not willing to share data, regulators should compel them to do so.

The rise of generative artificial-intelligence (AI) applications, which reduce the barrier to producing dubious content, is another reason to tackle the issue urgently. As Kiran Garimella at Rutgers University in New Brunswick, New Jersey, and Simon Chauchard at University Carlos III in Madrid point out in a Comment article7, their studies of users of the WhatsApp messaging app in India indicate that generative AI content does not seem to be prevalent in the misinformation mix as yet — but from what we know about how the use of technology evolves, it seems likely that it is only a matter of time.

The world has a shared interest in curbing the spread of misinformation and keeping public debate focused on issues of evidence and fact. Which curbing measures work and for whom must be tested — and first, independent researchers need access to the data that will allow society to make informed choices.